MIT Team Tests Object-Identifying Algorithm for the Visually Impaired

Of all the conditions that technological innovation has the potential to mitigate or ease, vision loss leaves plenty of room for the novel and the life-changing.

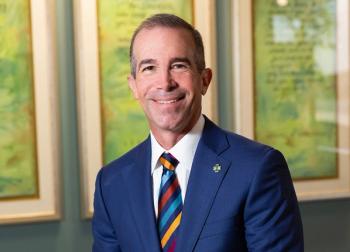

A visually impaired man uses the experimental system to locate an unoccupied chair. Screenshots courtesy of the researchers.


While ophthalmology hacks away at reversing the underlying conditions that cause blindness, and technologists and neurologists dream of someday linking an artificial seeing device into the proper channels of the brain, others have been using basic, existing devices and concepts to propose ways of making life easier for the visually impaired. One example is a device showcased at the American Academy of Ophthalmology’s 2016 conference last year: a camera, voice generator, and earpiece that clips onto a pair of glasses and reads text aloud to the wearer with surprising accuracy.

White canes have long been used by the blind and visually impaired to feel out the path ahead, but their drawbacks are obvious: they occupy one of the user’s hands and often tap into other people and objects. Researchers at the Massachusetts Institute of Technology (MIT) are now working on a system that may aid navigation, using a worn depth camera and a haptic feedback belt.


The system also has some subtlety. Beyond helping with object avoidance, it can be programmed to find a specific object, such as a chair, and to determine whether that chair is occupied, based on whether surfaces are parallel to the ground and fall within a defined height range. The algorithm first determines the ground plane and from there orients itself to other objects in the area, building a rough understanding of what they are.
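The article describes the chair finder only at a high level, so the following is a minimal sketch of how such a pipeline could work, not the MIT team’s actual code. The assumptions are ours: the depth camera yields a point cloud in metres with the camera at the origin and above the floor; the ground is the plane supported by the most points, found with RANSAC (a standard plane-fitting technique swapped in for illustration); a seat is a roughly horizontal patch 0.35 to 0.6 m above the ground; and "occupied" means enough points in a column above the seat. The function names (`ransac_plane`, `find_chair`) and every threshold are hypothetical.

```python
import math
import random
from dataclasses import dataclass
from typing import List, Optional, Tuple

# A 3-D point in metres, in the camera frame; camera at the origin (assumption).
Vec = Tuple[float, float, float]


def sub(p: Vec, q: Vec) -> Vec:
    return (p[0] - q[0], p[1] - q[1], p[2] - q[2])


def dot(p: Vec, q: Vec) -> float:
    return p[0] * q[0] + p[1] * q[1] + p[2] * q[2]


def cross(p: Vec, q: Vec) -> Vec:
    return (p[1] * q[2] - p[2] * q[1],
            p[2] * q[0] - p[0] * q[2],
            p[0] * q[1] - p[1] * q[0])


@dataclass
class Plane:
    normal: Vec    # unit normal vector
    offset: float  # points on the plane satisfy dot(normal, p) + offset == 0

    def signed_distance(self, p: Vec) -> float:
        return dot(self.normal, p) + self.offset


def plane_through(p1: Vec, p2: Vec, p3: Vec) -> Optional[Plane]:
    n = cross(sub(p2, p1), sub(p3, p1))
    length = math.sqrt(dot(n, n))
    if length < 1e-9:  # collinear sample: no unique plane
        return None
    unit = (n[0] / length, n[1] / length, n[2] / length)
    return Plane(unit, -dot(unit, p1))


def ransac_plane(points: List[Vec], tol: float = 0.02, iters: int = 200,
                 seed: int = 0) -> Tuple[Optional[Plane], List[Vec]]:
    """Fit the plane supported by the most points within `tol` metres."""
    if len(points) < 3:
        return None, []
    rng = random.Random(seed)
    best, best_inliers = None, []
    for _ in range(iters):
        plane = plane_through(*rng.sample(points, 3))
        if plane is None:
            continue
        inliers = [p for p in points if abs(plane.signed_distance(p)) <= tol]
        if len(inliers) > len(best_inliers):
            best, best_inliers = plane, inliers
    return best, best_inliers


def horizontal_distance(p: Vec, q: Vec, up: Vec) -> float:
    v = sub(p, q)
    h = dot(v, up)  # remove the vertical component, keep the footprint offset
    w = (v[0] - h * up[0], v[1] - h * up[1], v[2] - h * up[2])
    return math.sqrt(dot(w, w))


def find_chair(points: List[Vec], seat_min: float = 0.35, seat_max: float = 0.6,
               max_tilt_deg: float = 10.0, min_seat_points: int = 30,
               min_occupant_points: int = 20) -> Optional[dict]:
    # 1. Ground plane: assumed to be the plane with the most supporting points.
    ground, _ = ransac_plane(points)
    if ground is None:
        return None
    if ground.offset < 0:  # orient the normal "up", toward the camera at the origin
        ground = Plane((-ground.normal[0], -ground.normal[1], -ground.normal[2]),
                       -ground.offset)
    up, height = ground.normal, ground.signed_distance
    # 2. Seat: a planar patch inside the (hypothetical) seat-height band.
    candidates = [p for p in points if seat_min <= height(p) <= seat_max]
    seat, seat_pts = ransac_plane(candidates)
    if seat is None or len(seat_pts) < min_seat_points:
        return None
    # 3. The seat must be roughly parallel to the ground.
    if abs(dot(seat.normal, up)) < math.cos(math.radians(max_tilt_deg)):
        return None
    # 4. Occupancy: enough points in a column above the seat's footprint.
    n = len(seat_pts)
    centre = (sum(p[0] for p in seat_pts) / n,
              sum(p[1] for p in seat_pts) / n,
              sum(p[2] for p in seat_pts) / n)
    seat_h = height(centre)
    radius = max(horizontal_distance(p, centre, up) for p in seat_pts)
    above = [p for p in points
             if seat_h + 0.1 < height(p) < seat_h + 1.0
             and horizontal_distance(p, centre, up) <= radius]
    return {"seat_height": seat_h, "occupied": len(above) >= min_occupant_points}
```

Treating a column of points above the seat as a person is a crude proxy; a real system would also have to cope with sensor noise, partial views, and clutter that this sketch ignores.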

The system was tested on 15 blind subjects, all of whom typically navigate with a white cane, who completed a series of chair-finding exercises and indoor mazes. “For every task, the use of the system decreased the number of collisions compared to the use of a cane only,” the study concluded. An accompanying press release noted that the system reduced collisions with objects other than the target chairs by 80%, and cane collisions with other people by 86%, compared with white cane use alone.

The work is clearly at an early stage: a test of an algorithm rather than the development of a product. Still, it is novel, and perhaps someday it will inform a more dynamic system that keeps the seat warm for that distant artificial eye.