Laying the groundwork for smarter AI in clinical care: 4 essentials for a thoughtful strategy | Viewpoint

Careful planning can improve the chances of reaping the benefits of AI, without piling more on already‑stretched teams.

Healthcare leaders are all‑in on AI.

In one global survey,

So what’s worth adopting—and how do you roll it out without piling more on already‑stretched teams? Here’s a practical roadmap built around four essentials.

1. Start with high-impact, proven use cases

Begin where the pain is sharpest and the evidence is strongest: work that drains time and morale. Administrative burden and burnout cost U.S. health systems an estimated

By offloading that work to agentic AI, clinicians and staff claw back valuable time. At the same time, hospitals and health systems can reduce no-shows, speed up approvals, and, most critically, improve patient outcomes.

2. Balance short-term wins with long-term transformation

Pilots and point solutions matter. But they won’t cure structural problems like fragmented data and aging systems. More than

About a third lose nearly an hour per shift. That adds up to a staggering 23 workdays per clinician each year. In the short term, AI can help fill some of these gaps, for example by integrating data from multiple sources or flagging missing information. Ultimately, though, closing them for good requires modernizing IT infrastructure and phasing out systems that trap data in silos.

In parallel, modernize data architecture and interoperability so future capabilities have a sturdy foundation. Treat AI as part of a broader digital transformation—not a scatter of apps. With that foundation, comprehensive integration by 2030 could automate much of the administrative load and, in turn,

3. Hire for transformation, not tradition

Technology falters without the right leadership. As health systems expand their AI initiatives, success depends on leaders who can bridge clinical needs with technical innovation and drive process change that leverages the best of human and AI capabilities.

Look for hybrid talent that blends clinical understanding with product sense, data fluency, agile ways of working, and human‑centered design. Consider nontraditional candidates from tech, finance, or other fast‑moving sectors to complement internal clinical expertise, and create roles that can steer AI strategy across silos.

Many organizations are also partnering with technology companies and hyperscale cloud providers rather than building everything in‑house. In one survey,

Just as important, stand up governance early. A clear AI governance framework keeps efforts aligned with clinical priorities, sets safety and ethics guardrails, and forces attention to measurable outcomes. Finally, leading organizations are building out high-reliability, AI-enabled systems with the help of collaborative learning programs that bring

4. Break through cultural resistance to change

The “culture of no” is real. New tools fail when the people who must use them don’t trust them.

Bring physicians, nurses, and other end users into the process from the start—co‑design workflows, pilot in real settings, and run feedback loops that actually change the product.

Address legitimate concerns head‑on: accuracy, bias, and accountability.

Making AI work: A practical path forward

A smarter AI strategy starts small, thinks long, and stays human.

Focus first on clear‑value use cases—especially those that relieve administrative strain and burnout. In parallel, invest in the plumbing: interoperable data, modernized infrastructure, and disciplined governance.

Recruit leaders who can bridge medicine and technology, and equip them to steward change with transparency and rigor. And bring clinicians into the process early so AI becomes a helping hand, not another burden.

Do that, and the benefits arrive in two waves. Near term, you streamline operations, reduce no‑shows and bottlenecks, and return time to caregivers. Over time, you create the conditions to safely adopt more advanced capabilities as they mature, because the data pipelines, guardrails, and culture are already in place.

There will be skepticism to overcome, processes to re‑engineer, and legacy systems to unwind. But with a grounded plan, AI becomes a practical engine for better patient care and a more sustainable health system—rather than the latest hype cycle.

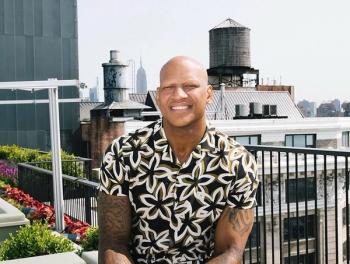

Angela Adams is CEO at Inflo Health.