The Dystopian Concerns of AI for Healthcare

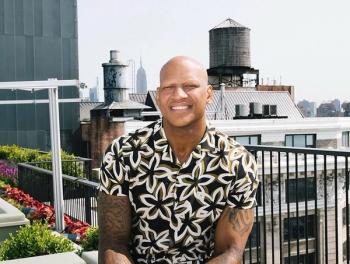

John Mattison, MD, likes science fiction. Today, he warned of the scenarios that could emerge from unchecked AI.

John Mattison, MD, likes science fiction. In a speech at the AI in Healthcare Summit today in Boston, he brought up Niven’s laws:

- Never fire a laser at a mirror.

- Giving up freedom for security is beginning to look naïve.

- It is easier to destroy than to create.

- Ethics change with technology.

- The only universal message in science fiction: There exist minds that think as well as you do but differently.

All (except the first) played a part, but the fourth in particular anchored his talk about the ethical challenges that artificial intelligence (AI) creates in healthcare. There are 4 major concerns, as he sees it: that AI will take jobs and dignity away from humans; that autonomous devices could be harnessed by bad actors and become a physical threat to humans; that unintentional bias will become a part of AI systems; and that bias will be intentionally programmed into the technology.

The Luddite replacement scenario is well known, and some groups are trying to harness blockchain to avoid the human-extermination Terminator scenario. Mattison was particularly concerned about bias.

Predictive technologies meant to determine who is most likely to commit a crime have already been shown to unfairly flag African Americans. Similar problems could emerge in healthcare, he said. Technologies could incorrectly exclude certain people from certain treatments based on previous and potentially biased evidence, denying them access to interventions that might have actually helped them.

Intentional bias, according to Mattison, is “really quite scary.” Similar to the

“If we don’t consciously, deliberately, actively, explicitly, vocally look out for the rights of the marginalized, they will suffer in incredible and unrecognized ways,” he said. “The only way to overcome it is to be aware.”